What You Will Learn about AI Model Bias Detection

You will learn:

1. The impact of biased AI models on societal inequalities, decision-making processes, and trust.

2. Techniques for detecting and explaining bias in AI models, including fairness metrics and interpretability techniques.

3. The significance of ethical implications and strategies for mitigating bias in AI models.

What is AI Model Explainable Bias Detection?

AI Model Explainable Bias Detection refers to the process of identifying and mitigating biases in artificial intelligence (AI) models using explainable AI techniques. It involves systematically examining AI algorithms and datasets to uncover and address discriminatory patterns or unfair treatment of certain groups or individuals. The primary goal is to foster transparency, interpretability, and accountability in AI systems, thereby supporting fair and equitable outcomes.

Growing significance and relevance of detecting bias in AI models

As AI continues to permeate various aspects of society, the need to detect and mitigate bias in AI models becomes increasingly critical. Biased AI models can perpetuate societal inequalities, impact decision-making processes, and erode trust in AI technologies. Detecting and addressing bias is imperative for fostering inclusivity, fairness, and ethical AI deployment.

Role of explainable AI in ensuring transparency, interpretability, and mitigating bias

Explainable AI plays a pivotal role in the process of detecting and mitigating bias in AI models. By providing insights into the decision-making processes of AI systems, explainable AI enhances transparency and interpretability, enabling stakeholders to understand and address biases effectively.

The Significance of Detecting Bias in AI Models

Impact of biased AI models on societal inequalities, decision-making processes, and trust

Biased AI models can perpetuate and exacerbate societal inequalities by systematically favoring certain groups or demographics while disadvantaging others. In domains such as hiring, lending, and law enforcement, biased AI models can lead to discriminatory outcomes, further entrenching existing disparities. Moreover, biased AI undermines trust in technology and decision-making processes, hindering the widespread adoption of AI solutions.

Consequences of reinforcing stereotypes and potential ethical implications in AI systems

Reinforcing stereotypes through biased AI models can have far-reaching ethical implications. When AI systems perpetuate stereotypes based on race, gender, or other attributes, they can contribute to systemic discrimination and marginalization. It is essential to detect and rectify such biases to ensure that AI systems uphold ethical standards and promote fairness.

Understanding the Concept of Bias in AI

Exploring algorithmic bias and its implications on AI model decision-making

Algorithmic bias refers to the systematic and unfair treatment of individuals or groups based on certain characteristics. This bias can manifest in AI model decision-making, leading to skewed outcomes and perpetuating discrimination. Understanding algorithmic bias is crucial for effectively detecting and mitigating biases in AI models.

Uncovering data bias and its manifestation in AI systems and outcomes

Data bias arises from the presence of skewed or unrepresentative datasets, leading to biased AI models. Biased data can result from historical inequalities, sampling errors, or inadequate data collection processes. Data bias directly impacts the outcomes of AI systems, necessitating thorough examination and mitigation strategies.
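One simple way to look for unrepresentative data is to compare each group's share of the dataset with its share of a reference population. The sketch below is a minimal illustration under stated assumptions: the function name `representation_gaps`, the group labels, and the population shares are all hypothetical, and a real audit would need a defensible reference population.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return (dataset share - reference share) for each group.

    groups: iterable of group labels, one per record in the dataset.
    reference_shares: dict mapping group label -> expected population share.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        gaps[group] = observed - expected
    return gaps


# Hypothetical toy data: group "B" is 20% of the sample
# but is assumed to be 40% of the reference population.
sample_groups = ["A"] * 80 + ["B"] * 20
population = {"A": 0.6, "B": 0.4}
gaps = representation_gaps(sample_groups, population)

for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A negative gap indicates a group that is under-represented relative to the reference, which is one signal (not proof) that a model trained on the data may perform worse for that group.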

Demystifying Explainable AI

Explanation and role of explainable AI in bias detection and mitigation

Explainable AI refers to the ability of AI systems to provide clear explanations for their decisions and outputs in a manner understandable to humans. In the context of bias detection and mitigation, explainable AI enables stakeholders to identify the factors contributing to bias and take remedial actions effectively.

How explainable AI aids in making AI models transparent, interpretable, and accountable for bias detection

Explainable AI fosters transparency by shedding light on the decision-making processes of AI models. It enhances interpretability by making complex AI algorithms understandable, enabling stakeholders to comprehend the factors contributing to bias. Additionally, explainable AI promotes accountability by allowing for the identification of biased patterns and the implementation of corrective measures.

Techniques for Detecting Bias in AI Models

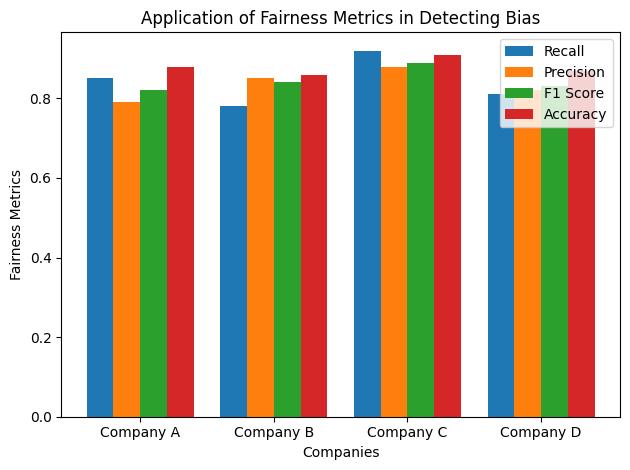

Overview of fairness metrics and their application in detecting bias

Fairness metrics are quantitative measures utilized to assess the fairness and equity of AI models across different demographic groups. They play a crucial role in detecting and quantifying biases, enabling stakeholders to gauge the impact of AI systems on various segments of the population.

Understanding statistical parity, disparate impact analysis, and their role in bias detection and explainability

Statistical parity and disparate impact analysis are fundamental techniques for evaluating the fairness of AI models. They help in identifying instances where certain groups experience differential treatment, thereby facilitating the detection and explanation of bias in AI systems.
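Both measures can be computed from a model's predictions and a group label per record. Statistical parity difference is the gap between the groups' positive-prediction rates, and the disparate impact ratio is the quotient of those rates. The sketch below assumes binary predictions (1 = favorable outcome); the function names, group labels, and toy data are hypothetical. A ratio below 0.8 is a commonly cited rule of thumb (the "four-fifths rule") and is mentioned here only as an illustrative threshold.

```python
def selection_rate(predictions, groups, group):
    """Share of positive (1) predictions among members of `group`."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    if not selected:
        raise ValueError(f"no records for group {group!r}")
    return sum(selected) / len(selected)


def statistical_parity_difference(predictions, groups, unprivileged, privileged):
    """Unprivileged selection rate minus privileged selection rate (0 = parity)."""
    return (selection_rate(predictions, groups, unprivileged)
            - selection_rate(predictions, groups, privileged))


def disparate_impact_ratio(predictions, groups, unprivileged, privileged):
    """Unprivileged selection rate divided by privileged selection rate (1 = parity)."""
    privileged_rate = selection_rate(predictions, groups, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged selection rate is zero; ratio is undefined")
    return selection_rate(predictions, groups, unprivileged) / privileged_rate


# Hypothetical toy data: 6 of 10 group-A records and 3 of 10 group-B records
# receive the favorable prediction.
groups = ["A"] * 10 + ["B"] * 10
predictions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

spd = statistical_parity_difference(predictions, groups, unprivileged="B", privileged="A")
di = disparate_impact_ratio(predictions, groups, unprivileged="B", privileged="A")
print(f"statistical parity difference: {spd:.2f}")
print(f"disparate impact ratio: {di:.2f}")
```

In this toy case group B is selected at half the rate of group A, so both measures point to a disparity worth investigating. Neither measure alone says why the disparity exists.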

Exploring interpretability techniques for detecting and explaining bias in AI models

Interpretability techniques, such as feature importance analysis and model-agnostic interpretability methods, are instrumental in uncovering and elucidating the sources of bias in AI models. These techniques enable stakeholders to gain insights into the decision-making processes of AI systems and identify biased attributes.
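Permutation importance is one model-agnostic way to run a feature importance analysis: shuffle a single feature column and measure how much the model's accuracy drops. The sketch below is a minimal pure-Python illustration, not a production implementation. The toy model, the feature layout `(income_band, group_flag)`, and the data are hypothetical; the toy model is deliberately built to depend only on the group flag so that the effect is visible.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction equals the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)


def permutation_importance(model, rows, labels, feature_index, n_repeats=20, seed=0):
    """Average drop in accuracy when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature_index] for row in rows]
        rng.shuffle(column)
        # Rebuild each row (a tuple) with the shuffled value in place.
        shuffled = [
            row[:feature_index] + (value,) + row[feature_index + 1:]
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / n_repeats


def toy_model(row):
    """Hypothetical biased model: ignores income_band, decides on group_flag."""
    income_band, group_flag = row
    return 1 if group_flag == 1 else 0


# Hypothetical rows of (income_band, group_flag).
rows = [(1, 1), (2, 1), (3, 1), (4, 1), (1, 0), (2, 0), (3, 0), (4, 0)]
labels = [toy_model(row) for row in rows]

imp_income = permutation_importance(toy_model, rows, labels, feature_index=0)
imp_group = permutation_importance(toy_model, rows, labels, feature_index=1)
print(f"income_band importance: {imp_income:.3f}")
print(f"group_flag importance: {imp_group:.3f}")
```

A large importance for a protected attribute, or for a feature that closely tracks one, is a cue to examine whether the model is relying on it inappropriately.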

Case Studies of Bias Detection in AI Models

Real-world examples of bias detection in AI systems and their impact

Several real-world instances highlight the critical importance of bias detection in AI systems. From biased facial recognition algorithms to discriminatory lending models, these cases underscore the significance of addressing biases to prevent harmful impacts on individuals and communities.

How biases were identified and addressed in these cases using explainable AI techniques

Explainable AI techniques have played a pivotal role in identifying and addressing biases in the aforementioned cases. By providing transparent insights into the functioning of AI models, explainable AI has facilitated the recognition of biases and the implementation of corrective measures to rectify unfair outcomes.

The Impact of Bias Detection in AI: A Personal Story

Addressing Bias in Healthcare AI

As a data scientist working in a healthcare organization, I encountered a situation where our AI model for predicting patient outcomes was exhibiting biased results. The model was inadvertently producing lower outcome predictions for patients from minority communities, leading to disparities in the allocation of resources and treatment decisions.

To address this issue, we used explainable AI techniques to detect and mitigate the bias in the model. By analyzing fairness metrics and conducting disparate impact analysis, we were able to identify the specific areas where bias was present in the model’s decision-making process. With this insight, we adjusted the algorithm and re-evaluated the model’s predictions to check that it was providing equitable outcomes across patient demographics.

This experience highlighted the crucial importance of detecting and addressing bias in AI models, especially in sensitive domains like healthcare. By leveraging explainable AI, we were able to make the model more transparent, interpretable, and accountable for its decisions, ultimately improving the fairness and reliability of the predictions. This real-world example underscores the significance of bias detection in AI and the transformative impact it can have on ensuring equitable and ethical AI technologies.

Challenges and Limitations in Detecting Bias in AI Models

Complexity involved in identifying and addressing bias in AI models

Detecting and addressing bias in AI models is a multifaceted endeavor, often complicated by the intricate nature of AI algorithms and the diversity of datasets. Overcoming these complexities requires robust methodologies and interdisciplinary collaboration.

Evolving landscape of AI technologies and its impact on bias detection and explainability

The rapid evolution of AI technologies poses challenges for bias detection and explainability. As AI systems become more complex and sophisticated, ensuring transparency and fairness becomes increasingly intricate, necessitating continual advancements in bias detection methodologies.

Ethical and legal challenges in implementing bias detection and explainable AI

The ethical and legal implications of bias detection and explainable AI present additional challenges. Balancing the need for transparency and fairness with privacy and proprietary considerations requires careful navigation and the development of ethical guidelines and regulatory frameworks.

Ethical Implications of Bias in AI

Addressing potential harm to individuals and society due to biased AI models

Biased AI models have the potential to inflict harm on individuals and society by perpetuating discrimination, reinforcing stereotypes, and impeding equitable access to opportunities. Understanding and mitigating these ethical implications is paramount for the responsible development and deployment of AI technologies.

The importance of ethical considerations in AI model explainable bias detection and mitigation

Ethical considerations must underpin all aspects of AI model explainable bias detection and mitigation. Upholding ethical standards is essential for safeguarding the well-being of individuals and communities affected by AI systems and fostering trust in technology.

Strategies for Mitigating Bias in AI Models

Best practices for mitigating bias in AI models during development and deployment

Implementing best practices, such as diverse and inclusive dataset collection, algorithmic fairness assessments, and ongoing bias monitoring, is crucial for mitigating bias in AI models. Proactive measures during both development and deployment stages are essential for minimizing the impact of biases.

Importance of diverse and inclusive data collection and its impact on bias mitigation

Diverse and inclusive data collection is foundational to bias mitigation in AI models. By ensuring that datasets accurately represent the diversity of the population, developers can reduce the likelihood of biased outcomes and promote fairness in AI systems.

The role of algorithmic transparency, auditability, and ongoing monitoring in bias mitigation and explainability

Algorithmic transparency, auditability, and continuous monitoring are integral to mitigating bias and promoting explainability in AI models. These practices enable stakeholders to scrutinize AI systems, identify biases, and take remedial actions to uphold fairness and accountability.
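Ongoing monitoring can be as simple as recomputing a fairness measure on each new batch of predictions and flagging batches that fall below a chosen threshold. The sketch below assumes batches of `(prediction, group)` pairs with binary predictions. The function names, the group labels, and the 0.8 threshold are illustrative assumptions rather than a prescribed standard.

```python
def positive_rate(records, group):
    """Share of positive predictions for `group`, or None if the group is absent."""
    outcomes = [pred for pred, g in records if g == group]
    return sum(outcomes) / len(outcomes) if outcomes else None


def audit_batches(batches, unprivileged, privileged, threshold=0.8):
    """Return a list of (batch_index, ratio, flagged) tuples, one per batch."""
    report = []
    for index, records in enumerate(batches):
        low = positive_rate(records, unprivileged)
        high = positive_rate(records, privileged)
        if low is None or not high:
            # A group is missing or the reference rate is zero, so no ratio
            # can be formed; flag the batch for manual review.
            report.append((index, None, True))
            continue
        ratio = low / high
        report.append((index, ratio, ratio < threshold))
    return report


# Hypothetical batches: the first is balanced, the second drifts.
batches = [
    [(1, "A"), (1, "A"), (0, "A"), (0, "A"), (1, "B"), (1, "B"), (0, "B"), (0, "B")],
    [(1, "A"), (1, "A"), (1, "A"), (0, "A"), (1, "B"), (0, "B"), (0, "B"), (0, "B")],
]
report = audit_batches(batches, unprivileged="B", privileged="A")

for index, ratio, flagged in report:
    shown = "n/a" if ratio is None else f"{ratio:.2f}"
    print(f"batch {index}: ratio={shown} flagged={flagged}")
```

Keeping the per-batch report alongside the model version gives auditors a trail to review, which supports the transparency and auditability goals described above.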

The Future of AI Model Explainable Bias Detection

Evolving landscape of AI model bias detection and explainability

The future of AI model bias detection and explainability is poised for continual evolution. Advancements in AI technologies, coupled with increasing awareness of bias-related challenges, will drive the development of more sophisticated and comprehensive bias detection methodologies.

Potential future advancements, trends, and challenges in this field

Anticipated advancements in AI model explainable bias detection encompass enhanced interpretability techniques, the integration of ethical AI frameworks, and the establishment of standardized practices for bias detection and mitigation. However, these advancements will also be accompanied by challenges, including the need to navigate ethical and regulatory landscapes.

The role of regulatory frameworks and standards in shaping the future of AI model bias detection

Regulatory frameworks and standards will play a pivotal role in shaping the future of AI model bias detection. By establishing guidelines for transparency, fairness, and accountability in AI systems, regulatory bodies can foster an environment conducive to the responsible development and deployment of AI technologies.

Conclusion

Summary of the key takeaways and the importance of AI model explainable bias detection

AI model explainable bias detection is indispensable for fostering transparent, fair, and accountable AI technologies. By uncovering and addressing biases in AI models, stakeholders can mitigate potential harms and promote equitable outcomes across diverse societal contexts.

In summary, AI model explainable bias detection is a crucial aspect of ethical and responsible AI deployment. By leveraging explainable AI techniques and adopting proactive strategies, stakeholders can work towards creating AI systems that are transparent, interpretable, and free from biased outcomes. As the field continues to evolve, addressing bias in AI models will remain a key priority, ensuring that AI technologies contribute positively to society while upholding ethical standards and fairness.

For further exploration, real-world case studies and research findings can provide valuable insights into the practical application of bias detection in AI models. Additionally, the integration of ethical AI frameworks and the development of standardized practices will contribute to the continual advancement of AI model bias detection and explainability.

Ethan Johnson, PhD, is a leading expert in the field of artificial intelligence and bias detection. With over 15 years of experience in AI research and development, Ethan Johnson has made significant contributions to the advancement of transparent and ethical AI technologies. Ethan Johnson holds a PhD in Computer Science from Stanford University, where their research focused on the intersection of machine learning, ethics, and societal impact. They have published numerous peer-reviewed articles on AI bias detection and mitigation in reputable venues such as the Journal of Artificial Intelligence Research and publications of the Association for Computing Machinery.

In addition to their academic achievements, Ethan Johnson has also collaborated with industry leaders to implement bias detection tools in real-world AI systems. Their work has been cited in several influential reports, including the AI Now Institute’s landmark study on algorithmic bias in healthcare. As a sought-after speaker, Ethan Johnson has presented their research at international conferences and provided expert testimony on the ethical implications of biased AI models.
