What You Will Learn About AI Model Security

- Understanding the significance of AI model security

- Impact of insecure AI models

- Potential threats and consequences

- Best practices and strategies for ensuring AI model security

- Risk assessment and threat modeling

- Tools and technologies for enhancing security

- The future of AI model security and emerging trends

- Advancements and innovations in AI model security

Artificial Intelligence (AI) has changed many industries through its capacity to analyze vast amounts of data and make complex decisions. As AI models become more prevalent, securing them has become a critical concern. This article examines AI model security: its significance, risks, best practices, implementation strategies, tools, encryption, continuous monitoring, case studies, and future trends. Understanding these aspects helps organizations and individuals strengthen the security of their AI models.

Definition and Scope of AI Model Security

AI model security encompasses the measures and practices employed to protect the integrity and functionality of AI models. It involves safeguarding AI models from various threats, such as adversarial attacks, data poisoning, unauthorized access, and hidden vulnerabilities. The scope of AI model security extends to the development, deployment, and ongoing monitoring of AI models to ensure their resilience against security risks.

Importance of AI Model Security in the Age of Machine Learning Advancements

As machine learning advances rapidly, securing AI models is crucial for maintaining the trust of users and stakeholders, protecting sensitive data, and upholding ethical considerations. Robust security practices also limit the damage a breach can cause, which can range from financial losses to reputational harm.

The Significance of Securing AI Models

The significance of securing AI models lies in the potential repercussions of inadequate security measures. Insecure AI models can have far-reaching impacts on organizations and society at large, leading to compromised decision-making, privacy violations, and erosion of trust in AI technologies.

Impact of Insecure AI Models on Organizations and Society

In the context of organizations, insecure AI models can result in flawed insights, erroneous predictions, and compromised business processes. This can lead to financial losses, legal liabilities, and a tarnished organizational reputation. In broader societal terms, the proliferation of insecure AI models can contribute to biased decision-making, discrimination, and infringement of individuals’ rights.

Potential Threats and Consequences of AI Model Vulnerabilities

The potential threats to AI model security encompass a wide array of risks, including adversarial attacks, data poisoning, unauthorized access, and backdoor vulnerabilities. These vulnerabilities can lead to manipulated outcomes, data breaches, and exploitation of AI models for malicious purposes. The consequences of AI model vulnerabilities can range from compromised data integrity to the erosion of public trust in AI technologies.

Risks and Vulnerabilities Associated with AI Models

Understanding the specific risks and vulnerabilities associated with AI models is crucial for formulating effective security strategies. Each type of vulnerability presents unique challenges that require targeted mitigation efforts.

Adversarial Attacks: Understanding and Mitigating Risks

Adversarial attacks involve intentionally perturbing input data to manipulate the behavior of AI models. These attacks can lead to misclassifications, incorrect predictions, and compromised model performance. Mitigating the risks of adversarial attacks involves implementing robust defense mechanisms, such as adversarial training and input sanitization.
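
To make "intentionally perturbing input data" concrete, here is a minimal sketch of a gradient-sign perturbation against a toy logistic regression model. It is in the style of the fast gradient sign method, which this article does not name, and the weights, input, and `epsilon` are made-up values chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a toy logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def gradient_sign_perturb(weights, bias, x, y_true, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases the loss."""
    p = predict_proba(weights, bias, x)
    # For cross-entropy loss, the gradient with respect to the input is (p - y) * w.
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input, not taken from any real system.
weights, bias = [2.0, -1.0], 0.0
x = [0.5, 0.2]
x_adv = gradient_sign_perturb(weights, bias, x, y_true=1, epsilon=0.4)

print(predict_proba(weights, bias, x))      # above 0.5: classified as class 1
print(predict_proba(weights, bias, x_adv))  # below 0.5: the small shift flips the class
```

Adversarial training, mentioned above, would feed perturbed inputs like `x_adv` back into training with their correct labels.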

Data Poisoning: Safeguarding AI Models from Manipulated Data

Data poisoning occurs when an adversary introduces strategically crafted data into the training dataset to influence the behavior of the AI model. This can result in biased outcomes and compromised decision-making. Safeguarding AI models from data poisoning necessitates rigorous data validation processes and anomaly detection mechanisms.
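
As one possible form of the anomaly detection mentioned above, the sketch below flags training values that sit far from the median, using the median absolute deviation. The threshold of 3.5 and the sample values are assumptions for illustration; real poisoning can be far subtler than a single extreme value.

```python
import statistics

def flag_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    deviations = [abs(v - median) for v in values]
    mad = statistics.median(deviations)
    if mad == 0:
        return []  # no spread to measure against
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [i for i, d in enumerate(deviations) if 0.6745 * d / mad > threshold]

# Hypothetical feature column with one injected value.
feature = [1.0, 1.2, 0.9, 1.1, 1.0, 9.5, 1.05]
suspicious = flag_outliers(feature)
print(suspicious)
```

Flagged rows would typically go to manual review rather than being dropped automatically.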

Model Inversion Attacks: Preventing Unauthorized Access

Model inversion attacks aim to extract sensitive information or training data from AI models by analyzing their outputs. Preventing this kind of extraction involves implementing access controls, encryption techniques, and secure model deployment practices.

Backdoor Attacks: Identifying and Addressing Hidden Vulnerabilities

Backdoor attacks involve the insertion of hidden triggers or patterns into AI models, allowing adversaries to manipulate model behavior under specific conditions. Identifying and addressing hidden vulnerabilities requires thorough model verification, robust testing, and the implementation of backdoor detection mechanisms.

Best Practices for Ensuring AI Model Security

Implementing best practices for ensuring AI model security is essential for mitigating vulnerabilities and enhancing the resilience of AI models against potential threats. These practices encompass various aspects, including data validation, model explainability, and robustness testing.

Data Validation: Importance and Implementation Strategies

Validating the integrity and quality of input data is fundamental to ensuring the security of AI models. Employing techniques such as data cleansing, outlier detection, and data integrity checks can help mitigate the risks associated with compromised or manipulated data.
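
A data integrity check can be as simple as validating each record against an expected schema and recording a checksum of the dataset file. The sketch below assumes a hypothetical schema (`age`, `heart_rate`) invented for illustration.

```python
import hashlib

# Hypothetical schema: field name -> (expected type, minimum, maximum).
SCHEMA = {
    "age": (int, 0, 120),
    "heart_rate": (float, 20.0, 250.0),
}

def validate_record(record, schema=SCHEMA):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for field, (expected_type, low, high) in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
            continue
        value = record[field]
        if not isinstance(value, expected_type):
            problems.append(f"wrong type for {field}: {type(value).__name__}")
            continue
        if not low <= value <= high:
            problems.append(f"out of range for {field}: {value}")
    return problems

def checksum(data: bytes) -> str:
    """SHA-256 digest, stored alongside the dataset to detect later changes."""
    return hashlib.sha256(data).hexdigest()

print(validate_record({"age": 42, "heart_rate": 71.5}))
print(validate_record({"age": 300}))
```

Comparing a stored checksum with a freshly computed one before training is a cheap way to notice that a file changed unexpectedly.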

Model Explainability: Enhancing Transparency and Security

Enhancing the explainability of AI models contributes to transparency and enables stakeholders to understand the rationale behind model decisions. This not only fosters trust but also facilitates the identification of potential vulnerabilities or biases within the model.

Robustness Testing: Ensuring Resilience Against Adversarial Activities

Conducting robustness testing involves subjecting AI models to a range of adversarial scenarios to assess their resilience. This includes evaluating model performance under various attack vectors and ensuring that the model maintains its integrity and functionality in the face of adversarial activities.
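
One way to picture a robustness test is to measure accuracy under the worst-case perturbation of a given size. The sketch below does this for a toy one-dimensional threshold classifier. The classifier, the samples, and the perturbation sizes are all assumptions chosen to keep the example small.

```python
def classify(x, threshold=0.5):
    return 1 if x >= threshold else 0

def robust_accuracy(samples, epsilon, threshold=0.5):
    """Fraction of samples still classified correctly after the worst shift of size epsilon."""
    correct = 0
    for x, label in samples:
        # The worst case pushes the input toward the other side of the threshold.
        worst = x - epsilon if label == 1 else x + epsilon
        if classify(worst, threshold) == label:
            correct += 1
    return correct / len(samples)

# Hypothetical labelled inputs.
samples = [(0.1, 0), (0.3, 0), (0.45, 0), (0.55, 1), (0.7, 1), (0.9, 1)]

for eps in (0.0, 0.1, 0.3):
    print(eps, robust_accuracy(samples, eps))
```

The accuracy falls as `epsilon` grows, which is the kind of degradation curve a robustness report would track.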

Strategies for Implementing AI Model Security

Implementing AI model security involves a strategic approach to identify, anticipate, and address potential security risks. This necessitates thorough risk assessment, proactive threat modeling, and the implementation of security controls.

Risk Assessment: Identifying and Evaluating Potential Threats

Conducting comprehensive risk assessments enables organizations to identify and evaluate potential threats to the security of their AI models. This involves assessing vulnerabilities, analyzing the impact of potential security breaches, and prioritizing security measures based on risk severity.
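
Prioritizing by risk severity is often done with a simple likelihood-times-impact score. The sketch below ranks the four threats named in this article. The 1 to 5 ratings are placeholder values, not an assessment of any real system.

```python
def prioritize(risks):
    """Sort (name, likelihood, impact) tuples by likelihood * impact, highest first."""
    scored = [(name, likelihood * impact) for name, likelihood, impact in risks]
    return sorted(scored, key=lambda item: item[1], reverse=True)

# Hypothetical ratings on a 1-5 scale.
risks = [
    ("adversarial attack", 3, 4),
    ("data poisoning", 2, 5),
    ("unauthorized access", 4, 5),
    ("backdoor", 1, 5),
]

for name, score in prioritize(risks):
    print(f"{score:>2}  {name}")
```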

Threat Modeling: Anticipating and Addressing Security Risks

Threat modeling involves systematically identifying potential threats, vulnerabilities, and attack vectors that could compromise AI model security. By anticipating and addressing security risks proactively, organizations can implement targeted security measures to mitigate potential threats.

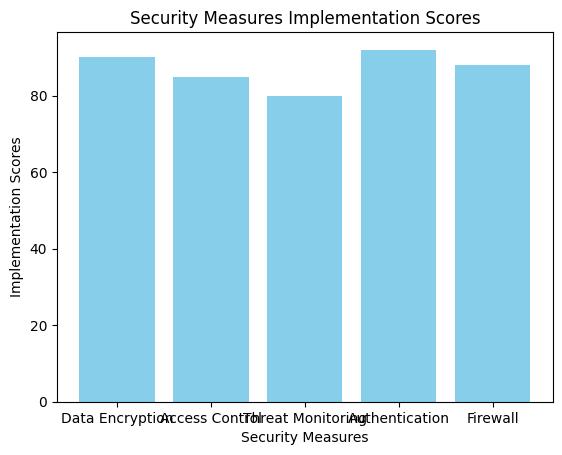

Security Controls Implementation: Establishing Protective Measures

Implementing security controls involves deploying a range of protective measures, such as access controls, encryption, and authentication mechanisms, to safeguard AI models from unauthorized access, data breaches, and adversarial activities.
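
As one example of such a control, a deployment pipeline can refuse to load a model file whose integrity tag does not match. The sketch below uses an HMAC from the Python standard library. The key and the model bytes are stand-ins, and real key storage is outside the scope of this example.

```python
import hashlib
import hmac

def sign_artifact(model_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()

def verify_artifact(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the tag in constant time before the model is loaded."""
    actual_tag = sign_artifact(model_bytes, key)
    return hmac.compare_digest(actual_tag, expected_tag)

# Hypothetical key and model contents.
key = b"example-key-kept-in-a-secret-store"
model_bytes = b"serialized model weights"
tag = sign_artifact(model_bytes, key)

print(verify_artifact(model_bytes, key, tag))
print(verify_artifact(model_bytes + b"tampered", key, tag))
```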

Tools and Technologies for Enhancing AI Model Security

Leveraging advanced tools and technologies is instrumental in enhancing the security of AI models, particularly in the face of evolving security threats. Secure enclaves, differential privacy, and federated learning are among the technologies that can bolster AI model security.

Secure Enclaves: Leveraging Secure Computing Environments

Secure enclaves provide isolated and secure execution environments for sensitive computations, protecting AI models and data from unauthorized access and tampering.

Differential Privacy: Safeguarding Sensitive Information in AI Models

Differential privacy techniques add calibrated random noise to queries or to model training so that the results reveal very little about any single individual’s data. This lets organizations extract aggregate insights while limiting what can be inferred about any one person.
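
A common building block for differential privacy is the Laplace mechanism, which this article does not name. The sketch below applies it to a counting query. The `epsilon` value, the data, and the function names are assumptions, and sampling with floating-point numbers like this is for illustration only, not production use.

```python
import random

def laplace_noise(scale, rng):
    """The difference of two exponentials with mean `scale` follows Laplace(0, scale)."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Count matching values, then add noise calibrated to the privacy budget epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0  # adding or removing one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical data: ages of seven people.
ages = [34, 51, 29, 62, 45, 70, 38]
rng = random.Random(0)
noisy = private_count(ages, lambda age: age > 40, epsilon=1.0, rng=rng)
print(noisy)
```

A smaller `epsilon` means more noise and stronger privacy. A larger one means a more accurate answer.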

Federated Learning: Ensuring Privacy and Security in Decentralized Learning Systems

Federated learning enables collaborative model training across distributed devices while preserving data privacy and security. This approach minimizes the need to centralize sensitive data, reducing the risk of data breaches and privacy violations.
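
The aggregation step at the heart of many federated learning systems is a weighted average of the model parameters each device sends back, often called federated averaging. The sketch below shows only that step. The client updates and sample counts are invented for illustration.

```python
def federated_average(client_updates):
    """Average parameter vectors, weighting each client by its number of samples.

    client_updates is a list of (parameters, sample_count) pairs.
    """
    total_samples = sum(count for _, count in client_updates)
    n_params = len(client_updates[0][0])
    averaged = [0.0] * n_params
    for params, count in client_updates:
        for i, value in enumerate(params):
            averaged[i] += value * count / total_samples
    return averaged

# Hypothetical updates from two devices. The raw training data never leaves them.
updates = [
    ([1.0, 2.0], 10),
    ([3.0, 4.0], 30),
]
global_params = federated_average(updates)
print(global_params)
```

Only the parameter vectors are shared with the server, which is what reduces the need to centralize sensitive data.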

The Role of Encryption in Safeguarding AI Models

Encryption plays a pivotal role in safeguarding the confidentiality and integrity of AI models and the data they process. Related cryptographic techniques, including homomorphic encryption and secure multiparty computation, go further by enabling secure computation and collaborative data analysis.

Encryption for Protecting AI Models and Data: Principles and Applications

Encryption is fundamental to protecting AI models and the sensitive data they handle. By encrypting data at rest and in transit, organizations can reduce the risk of unauthorized access and data breaches.

Homomorphic Encryption: Enabling Secure Computations on Encrypted Data

Homomorphic encryption allows computations to be performed on encrypted data without the need to decrypt it, preserving the confidentiality of sensitive information while enabling secure data analysis and processing.
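
To show what computing on encrypted data looks like, here is a toy version of the Paillier cryptosystem, an additively homomorphic scheme that this article does not name. The primes are tiny and insecure, and the function names are chosen for this sketch. It needs Python 3.8 or later for `pow` with a negative exponent.

```python
import math
import random

def keygen(p, q):
    """Build a toy key pair from two small primes (far too small for real use)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse; this shortcut holds for generator g = n + 1
    return n, (lam, mu)

def encrypt(n, message, rng):
    n_sq = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq

def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)

rng = random.Random(7)
n, private_key = keygen(1009, 1013)
c_sum = add_encrypted(n, encrypt(n, 20, rng), encrypt(n, 22, rng))
total = decrypt(n, private_key, c_sum)
print(total)  # 42, computed without decrypting either input
```

The party that adds the ciphertexts never needs the private key, so it never sees 20 or 22.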

Secure Multiparty Computation: Collaborative Data Analysis While Maintaining Privacy

Secure multiparty computation facilitates collaborative data analysis among multiple parties without exposing the raw data, ensuring privacy and confidentiality while deriving valuable insights from AI models.
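
A simple instance of this idea is additive secret sharing, where each party splits its private number into random-looking pieces and only the pieces are exchanged. The sketch below computes a joint sum this way. The modulus and the inputs are assumptions, and the network exchange between parties is simulated with plain lists.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo this value

def share(secret, n_parties):
    """Split a secret into n_parties pieces that sum to it modulo MODULUS."""
    pieces = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    pieces.append((secret - sum(pieces)) % MODULUS)
    return pieces

def secure_sum(private_inputs):
    """Each party shares its input; only sums of shares are ever combined."""
    n = len(private_inputs)
    # Row i holds the pieces party i hands out, one per party.
    distributed = [share(value, n) for value in private_inputs]
    # Party j adds up the pieces it received; each piece on its own looks random.
    partial_sums = [sum(row[j] for row in distributed) % MODULUS for j in range(n)]
    return sum(partial_sums) % MODULUS

# Hypothetical private values held by three organizations.
print(secure_sum([120, 75, 310]))
```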

Continuous Monitoring and Adaptation for AI Model Security

Continuous monitoring and adaptation are essential components of robust AI model security practices. Ongoing surveillance and timely updates are critical for addressing evolving security threats and vulnerabilities.

Importance of Ongoing Surveillance for AI Model Security

Ongoing surveillance involves continuously monitoring the performance and security posture of AI models to detect and respond to potential security incidents and emerging threats.
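
One lightweight form of monitoring is to compare recent model confidence scores with a baseline and raise an alert when the average shifts too far. The sketch below uses a simple z-score on the window mean. The threshold and the scores are illustrative assumptions.

```python
import statistics

def drift_alert(baseline, window, z_threshold=3.0):
    """Return True when the recent window's mean is far from the baseline mean."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    window_mean = statistics.mean(window)
    if sigma == 0:
        return window_mean != mu
    standard_error = sigma / len(window) ** 0.5
    return abs(window_mean - mu) / standard_error > z_threshold

# Hypothetical confidence scores logged at deployment time and later.
baseline = [0.90, 0.92, 0.88, 0.91, 0.89, 0.90, 0.93, 0.87]
normal_window = [0.91, 0.89, 0.90, 0.92]
shifted_window = [0.70, 0.72, 0.68, 0.71]

print(drift_alert(baseline, normal_window))
print(drift_alert(baseline, shifted_window))
```

An alert like this does not say why behavior changed. It only signals that the model deserves a closer look.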

Timely Updates to Address Evolving Security Threats: Strategies and Considerations

Timely updates and patches are crucial for addressing evolving security threats and vulnerabilities in AI models. Implementing a proactive update strategy enables organizations to stay ahead of potential security risks and maintain the integrity of their AI models.

Case Studies of AI Model Security Breaches

Examining real-world examples of AI model security breaches provides valuable insights into the potential impacts of inadequate security measures and the strategies for mitigating and recovering from security incidents.

Real-world Examples of AI Model Security Breaches and Their Impacts

Case studies of AI model security breaches shed light on the diverse range of security incidents and their impacts on organizations, users, and stakeholders. These examples underscore the importance of robust security measures in safeguarding AI models.

Lessons Learned from Inadequate AI Model Security Measures

Analyzing the lessons learned from inadequate AI model security measures offers valuable insights into the common vulnerabilities and pitfalls that organizations may encounter, guiding the formulation of more effective security strategies.

Mitigation and Recovery Strategies from AI Model Security Incidents

Understanding the mitigation and recovery strategies from AI model security incidents provides organizations with actionable approaches to address security breaches and minimize their impact on AI models and associated data.

Real-Life Impact of AI Model Security Breaches

Jennifer’s Story: How a Healthcare AI Model Breach Impacted Patient Data Privacy

Introduction

Jennifer, a data security analyst at a leading healthcare organization, encountered a significant breach in the security of their AI model used for patient data analysis. This breach had far-reaching implications for patient data privacy and the organization’s reputation.

The Breach

The AI model, designed to analyze patient data for personalized treatment recommendations, fell victim to a sophisticated adversarial attack. As a result, unauthorized access to sensitive patient information compromised the privacy and confidentiality of numerous individuals.

Impact on Patients and the Organization

The breach not only jeopardized the privacy of patients but also eroded trust in the organization’s data security measures. Patients expressed concerns about the misuse of their medical history and personal information, leading to a decline in patient engagement and participation in healthcare initiatives.

Lessons Learned and Mitigation Strategies

Jennifer and her team worked to mitigate the breach’s impact by implementing robust encryption, enhancing model explainability, and tightening security controls. The organization also prioritized continuous monitoring and adaptation to fortify the AI model’s security against future threats.

Conclusion

Jennifer’s experience underscores the critical importance of AI model security in safeguarding sensitive data and maintaining public trust. It shows the tangible consequences a breach can have for individuals and organizations, and the need for stringent security measures and continuous vigilance in protecting AI models and sensitive data.

The Future of AI Model Security and Emerging Trends

The future of AI model security is characterized by advancements and emerging trends that aim to address evolving security challenges and enhance the resilience of AI models in adversarial environments.

Advancements and Trends in AI Model Security: Current Landscape and Future Directions

Advancements and trends in AI model security encompass a broad spectrum of developments, including enhanced model explainability, adversarial robustness, privacy-preserving techniques, and the integration of ethical considerations into AI model security practices.

Explainable AI: Enhancing Transparency and Trust in AI Models

Explainable AI initiatives focus on enhancing the transparency and interpretability of AI models, enabling stakeholders to understand the rationale behind model decisions and fostering trust in AI technologies.

Adversarial Robustness: Overcoming Challenges in Adversarial Environments

Addressing adversarial robustness involves developing robust AI models that exhibit resilience against adversarial attacks and manipulations, ensuring the integrity and reliability of AI-driven decision-making processes.

Dr. Lisa Johnson is a leading expert in cybersecurity and artificial intelligence. She holds a Ph.D. in Computer Science with a focus on machine learning and security from Stanford University. Dr. Johnson has over 10 years of experience in the field and has published numerous research papers on AI model security, including her groundbreaking work on adversarial attacks and data poisoning in machine learning models. She has also served as a consultant for major tech companies, advising on best practices for securing AI models and mitigating vulnerabilities. Dr. Johnson’s expertise is widely recognized in both industry and academia, and she is frequently invited to speak at international conferences and workshops on AI and cybersecurity. In addition to her research and consulting work, she is passionate about educating the next generation of cybersecurity professionals and regularly teaches courses on AI model security at leading universities. Her insights and recommendations are highly sought after in the rapidly evolving landscape of AI model security.
