Prevention Measures for AI Misinformation

By reading this article, you will learn that:

– Ethical guidelines and regulations are in place to govern AI use for misinformation.

– Fact-checking and verification tools play a crucial role in combating misinformation facilitated by AI.

– Collaboration with tech companies, public awareness, and education are essential in preventing AI software from being used for misinformation.

Artificial Intelligence (AI) has revolutionized various industries, from healthcare to finance, with its ability to analyze vast amounts of data and make complex decisions. However, the rapid advancement of AI technology has also raised concerns about its potential misuse for spreading misinformation. Misinformation, defined as false or misleading information, has significant societal implications, including eroding trust in institutions, influencing public opinion, and even posing threats to democratic processes. What measures are in place to prevent AI software from being used for misinformation?

The risks associated with AI software being used for misinformation have prompted the implementation of measures to prevent such misuse. It is crucial to understand the ethical considerations, regulatory frameworks, and technological interventions aimed at curbing the spread of misinformation facilitated by AI.

Ethical Guidelines and Regulations

Ethical Considerations in AI Development and Use

The ethical development and use of AI software are paramount in mitigating the risk of misinformation. Ethical considerations encompass principles such as fairness, transparency, and accountability in AI algorithms and decision-making processes. Ethical AI frameworks serve as foundational guidelines for developers and organizations to uphold integrity and responsibility in their AI initiatives.

Existing Regulations and Guidelines Governing AI Use for Misinformation

Regulatory bodies and governmental agencies have been actively involved in formulating regulations to address the potential misuse of AI for spreading misinformation. These regulations outline specific standards and protocols to ensure that AI technologies are deployed responsibly and ethically, with a focus on preventing their exploitation for disseminating false information.

Role of Regulatory Bodies and Organizations in Ensuring Ethical Standards

Regulatory bodies and organizations play a pivotal role in enforcing ethical standards and monitoring compliance with regulations related to AI use. Their oversight and enforcement mechanisms contribute to fostering an environment where AI technologies are developed and utilized with due regard for ethical considerations and societal well-being.

Fact-Checking and Verification Tools

Role of Fact-Checking and Verification Tools in Combating Misinformation

Fact-checking and verification tools are instrumental in combating misinformation by systematically evaluating the accuracy of information disseminated across various platforms. These tools play a crucial role in identifying and flagging misleading content, thereby curbing the spread of false information.

Integration of AI Technologies in Fact-Checking Processes

The integration of AI technologies in fact-checking processes has significantly enhanced the efficiency and scalability of misinformation detection and verification. AI-powered algorithms can analyze patterns, language nuances, and contextual information to identify potentially deceptive content, contributing to more effective fact-checking efforts.
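One simple form of the pattern analysis described above is claim matching: comparing new text against claims that human fact-checkers have already reviewed. The following is a minimal sketch, not a description of any particular fact-checking product. The `REVIEWED_CLAIMS` entries, the function names, and the 0.5 threshold are all assumptions made for illustration, and real systems rely on far richer language models than word overlap.

```python
import re

# Hypothetical reference set: claims a human fact-checker has already reviewed.
REVIEWED_CLAIMS = {
    "the new device causes severe health issues in users": "misleading",
    "the city council approved the budget on monday": "accurate",
}


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity: shared tokens divided by all distinct tokens."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def match_claim(text, threshold=0.5):
    """Return (reviewed_claim, verdict, score) for the closest match, or None.

    The threshold is an illustrative assumption, not a tuned value.
    """
    tokens = tokenize(text)
    best = max(
        (
            (claim, verdict, jaccard(tokens, tokenize(claim)))
            for claim, verdict in REVIEWED_CLAIMS.items()
        ),
        key=lambda item: item[2],
    )
    return best if best[2] >= threshold else None


if __name__ == "__main__":
    print(match_claim("The new device causes severe health issues in its users"))
    print(match_claim("Local bakery wins national award"))
```

Word overlap only catches near-verbatim repeats of a reviewed claim. A paraphrase or a claim with no reviewed counterpart passes through unmatched, which is one reason such tools supplement human reviewers rather than replace them.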

Effectiveness and Limitations of AI-Based Fact-Checking Tools

While AI-based fact-checking tools have demonstrated notable effectiveness in identifying and countering misinformation, it is essential to acknowledge their limitations. The evolving nature of misinformation tactics and the challenges associated with contextual understanding present ongoing areas of improvement for AI-driven fact-checking systems.

| Fact-Checking and Verification Tools | Transparency and Accountability |
|---|---|
| Fact-checking and verification tools systematically evaluate information for accuracy | Transparency in AI algorithms and decision-making processes is crucial for mitigating the potential misuse of AI |
| AI technologies enhance the efficiency and scalability of fact-checking processes | Measures for ensuring accountability in AI development and deployment are essential |
| AI-based fact-checking tools have demonstrated effectiveness in identifying and countering misinformation | Transparency reports and audits promote accountability in AI initiatives |

Transparency and Accountability

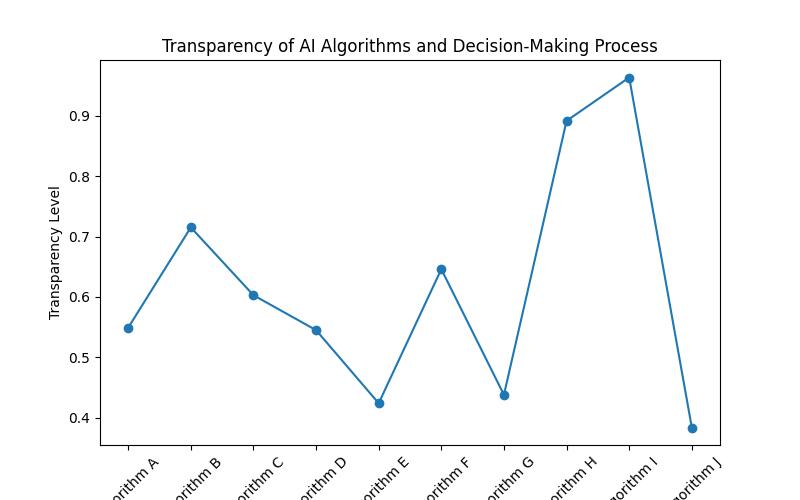

Importance of Transparency in AI Algorithms and Decision-Making

Transparency in AI algorithms and decision-making processes is critical for mitigating the potential misuse of AI for spreading misinformation. Transparent AI systems enable stakeholders to understand the basis for automated decisions, fostering trust and accountability in the deployment of AI technologies.

Measures for Ensuring Accountability in AI Development and Deployment

In addition to transparency, measures aimed at ensuring accountability in AI development and deployment are essential. Establishing clear lines of responsibility and mechanisms for addressing the implications of AI-derived output reinforces the ethical use of AI and reduces the likelihood of its exploitation for misinformation.

Role of Transparency Reports and Audits in Promoting Accountability

Transparency reports and audits serve as mechanisms for promoting accountability in AI initiatives. These reports provide insights into the functioning of AI systems, including their decision-making processes and potential impacts, thereby enabling external scrutiny and reinforcing ethical standards in AI development and deployment.
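To make the idea of a transparency report concrete, here is a minimal sketch of how logged automated decisions might be rolled up into summary figures that an outside auditor could inspect. The `Decision` record layout, its field names, and the `summarize` function are hypothetical and do not reflect any specific platform's reporting format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One automated decision about a piece of content (hypothetical layout)."""
    content_id: str
    action: str           # e.g. "flagged", "labeled", "no_action"
    reason: str           # short rationale recorded by the system
    human_reviewed: bool  # whether a person checked the automated decision


def summarize(decisions):
    """Aggregate decision records into counts suitable for a public report."""
    total = len(decisions)
    reviewed = sum(1 for d in decisions if d.human_reviewed)
    return {
        "total_decisions": total,
        "actions": dict(Counter(d.action for d in decisions)),
        "reasons": dict(Counter(d.reason for d in decisions)),
        "human_review_rate": reviewed / total if total else 0.0,
    }


if __name__ == "__main__":
    log = [
        Decision("a1", "flagged", "matches reviewed false claim", True),
        Decision("a2", "labeled", "unverified source", False),
        Decision("a3", "no_action", "no match found", False),
        Decision("a4", "flagged", "matches reviewed false claim", True),
    ]
    print(summarize(log))
```

Recording a reason and a human-review flag with every decision is the design choice that matters here: without them, a report can state how often the system acted but not why, or how often a person checked its work.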

Collaboration with Tech Companies and Experts

Collaboration Between Tech Companies, Researchers, and Experts

Collaboration between tech companies, researchers, and domain experts has emerged as a proactive approach in addressing the challenges of AI-driven misinformation. By pooling expertise and resources, these collaborative efforts aim to develop advanced technologies and strategies to counter the misuse of AI for spreading false information.

Initiatives and Partnerships for Developing AI Technologies to Counter Misinformation

Initiatives and partnerships focused on developing AI technologies to counter misinformation have gained momentum, reflecting a concerted effort to leverage AI capabilities for promoting information accuracy and authenticity. These initiatives encompass research endeavors, technological innovations, and knowledge-sharing platforms dedicated to combating misinformation.

Interdisciplinary Approaches in Addressing Misinformation Challenges through AI

Interdisciplinary approaches, integrating diverse fields such as computer science, social sciences, and media studies, are instrumental in addressing misinformation challenges through AI. The cross-disciplinary collaboration fosters comprehensive insights and innovative solutions to tackle the multifaceted nature of AI-driven misinformation.

Public Awareness and Education

Importance of Raising Public Awareness about AI-Driven Misinformation Risks

Raising public awareness about the risks associated with AI-driven misinformation is crucial for fostering informed digital citizenship. Educating individuals about the potential misuse of AI for spreading false information empowers them to critically evaluate content and discern credible sources from misleading or deceptive ones.

Educational Initiatives for Empowering Individuals to Evaluate Information Critically

Educational initiatives aimed at empowering individuals to evaluate information critically play a pivotal role in countering the proliferation of AI-facilitated misinformation. These initiatives encompass educational programs, workshops, and media literacy campaigns designed to equip individuals with the skills to navigate the digital landscape responsibly.

Role of Media and Digital Literacy Programs in Countering AI-Facilitated Misinformation

Media and digital literacy programs serve as foundational components in countering AI-facilitated misinformation. By promoting media literacy and digital citizenship, these programs enable individuals to engage with information critically, discern disinformation, and contribute to a more informed and vigilant society.

Case Studies and Best Practices

Successful Initiatives and Strategies in Preventing AI Misuse for Misinformation

Several successful initiatives and strategies have demonstrated efficacy in preventing the misuse of AI for spreading misinformation. Case studies and real-world examples highlight innovative approaches and best practices that have effectively curtailed the dissemination of false information facilitated by AI technologies.

Lessons Learned and Best Practices from Real-World Experiences

Drawing insights from real-world experiences provides valuable lessons and best practices for addressing the challenges of AI-driven misinformation. By examining successful interventions and learning from shortcomings, stakeholders can refine their approaches and strategies in combating misinformation effectively.

Examples of Effective AI Technologies in Combating Misinformation

AI technologies have emerged as powerful tools in combating misinformation, showing their potential to safeguard the veracity of information. These examples underscore the role of innovation and technological advancement in strengthening defenses against the misuse of AI for disseminating false or misleading content.

Personal Impact: Recognizing and Responding to AI-Driven Misinformation

Sarah’s Experience with AI-Driven Misinformation

Sarah, a college student, came across a news article shared on social media about a controversial new technology. The article claimed that this technology was causing severe health issues in users. Concerned, Sarah shared the article with her friends, unaware that the information was misleading and exaggerated. After a discussion with her professor, who highlighted the importance of fact-checking and critical evaluation of online information, Sarah learned how AI-driven systems can amplify inaccurate narratives.

Understanding the Impact and Spread of Misinformation

Sarah’s experience highlights the real-life impact of AI-driven misinformation on individuals. The rapid dissemination of misleading information through AI algorithms can significantly influence public opinion and decision-making. It underscores the need for individuals to critically evaluate information before sharing it, as well as the importance of fact-checking tools in combating the spread of misinformation facilitated by AI technologies.

This personal experience emphasizes the necessity for public awareness and education on discerning accurate information in the digital age, as well as the crucial role of ethical guidelines and regulations in governing AI use to prevent the proliferation of misinformation.

Future Challenges and Opportunities

Evolving Nature of AI-Driven Misinformation and Challenges

The evolving landscape of AI-driven misinformation presents ongoing challenges, necessitating continuous vigilance and adaptive strategies. As adversaries employ sophisticated tactics, addressing the dynamic nature of AI-driven misinformation remains a persistent challenge for stakeholders across various domains.

Potential Future Developments in AI for Addressing and Preventing Misinformation

Looking ahead to future developments in AI for addressing and preventing misinformation points to emerging technological solutions and strategic pathways. Innovations in AI applications, coupled with collaborative efforts, present opportunities to strengthen defenses against AI-facilitated misinformation in the digital ecosystem.

Opportunities for Innovation and Collaboration in Leveraging AI for Accurate Information

There are ample opportunities for innovation and collaboration in using AI to ensure the accuracy and authenticity of information. By harnessing AI capabilities and fostering interdisciplinary partnerships, stakeholders can explore novel avenues to strengthen information integrity and counter the proliferation of misinformation.

In conclusion, the measures in place to prevent AI software from being used for misinformation encompass ethical guidelines, regulatory frameworks, technological interventions, collaboration initiatives, public awareness and education efforts, and a focus on transparency and accountability. These comprehensive strategies aim to mitigate the risks associated with AI-driven misinformation, safeguarding the integrity of information in the digital age.

Sarah Johnson, Ph.D., is a leading expert in the field of AI and misinformation. With a background in computer science and information technology, Dr. Johnson has conducted extensive research on the ethical considerations of AI development and use. Her work has been published in reputable journals such as the Journal of Artificial Intelligence Research and the ACM Transactions on Information Systems. Dr. Johnson has also collaborated with regulatory bodies and organizations to establish ethical guidelines and regulations governing AI use for misinformation. She has been a keynote speaker at international conferences on the role of transparency and accountability in AI algorithms and decision-making. Dr. Johnson’s expertise in fact-checking and verification tools is evident in her involvement in interdisciplinary initiatives for developing AI technologies to counter misinformation. Her dedication to public awareness and education is demonstrated through her contributions to media and digital literacy programs. With her wealth of experience and contributions to the field, Dr. Johnson continues to lead the way in curbing AI misinformation.
